Why HR Needs AI Literacy: Understanding,

12/16/2025 · 4 min read

Artificial Intelligence (AI) hiring algorithms are now common in recruitment. They automatically screen, score, and rank candidates based on the data they submit through their CV or via application forms. But they can also amplify bias or violate the EU AI Act if misused. This article explains how these systems work, the risks they pose, and what HR professionals must do to ensure fairness, transparency, and compliance. It also highlights why building AI literacy through targeted training is now essential for responsible and lawful recruitment.


AI Literacy: How AI Hiring Algorithms Work

The most important thing to understand is that AI hiring algorithms serve different purposes, each mapping to a task that HR professionals once did manually.

Let’s consider targeted recruiting, the strategy of proactively and specifically searching for candidates with certain skills, experience, or characteristics to fill roles, rather than just waiting for applicants.

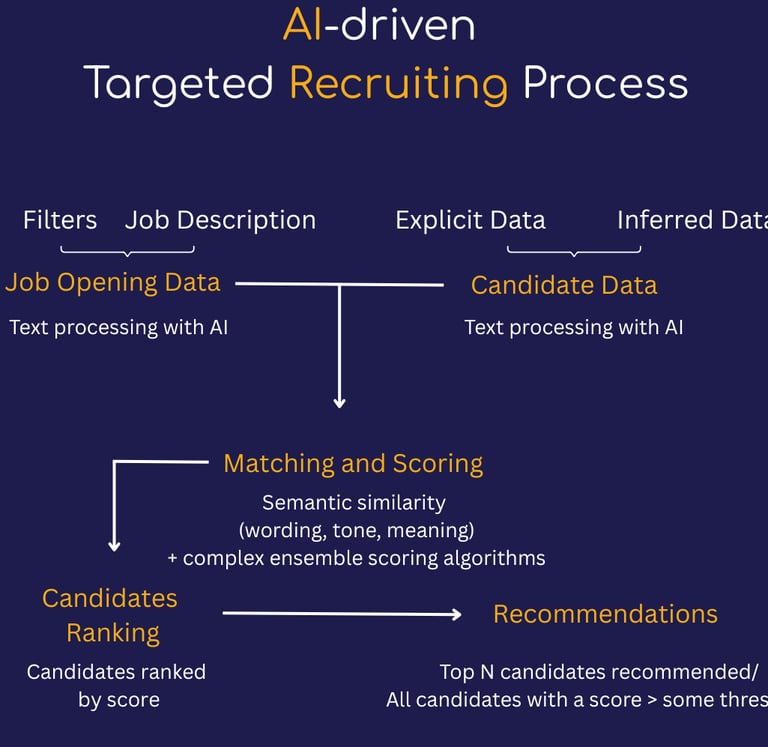

Platforms that make targeted recruiting seamless rely heavily on data collection and AI. When a recruiter provides a job description, the expectation is to get back a ranked list of the most suitable candidates. Below is an illustration of the process behind the scenes.

Data processing: The job description is processed by AI algorithms that convert the text into numerical values (often called embeddings) designed to preserve its meaning.
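To make "text converted into numbers" concrete, here is a minimal Python sketch. Commercial platforms typically use learned embedding models whose internals vendors rarely disclose. For illustration only, this example swaps in a much simpler bag-of-words count over a small, hypothetical vocabulary. It captures word overlap but not meaning, which is exactly the gap that embedding models try to close.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def vectorize(text: str, vocabulary: list[str]) -> list[int]:
    """Count how often each vocabulary word appears in the text.

    A simplified stand-in for a learned embedding model: it captures
    word overlap only, not meaning.
    """
    counts = Counter(tokenize(text))
    return [counts[word] for word in vocabulary]


# Hypothetical vocabulary chosen for illustration only.
VOCABULARY = ["python", "sql", "recruiting", "sales", "leadership"]

job_description = "Data analyst: Python and SQL required, SQL reporting a plus."
print(vectorize(job_description, VOCABULARY))  # [1, 2, 0, 0, 0]
```

In this representation, a CV that says "recruiting" shares nothing with a job description that says "hiring". Richer embedding models aim to place such synonyms close together, but how well they succeed depends on the vendor's model.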

Information in candidate profiles comes from two sources:

Specific information provided by the candidate

Information inferred by AI profiling algorithms (through activity tracking on the platform and interactions with other users or companies)

The candidate information is likewise processed with AI and converted to numbers.

Matching: During the matching phase, the job opening and the candidates are compared and scored based on their similarity. Similar wording, skills, and tone of voice all count toward whether a candidate is considered a match for the job. Matching can be a very complex process, with algorithms scoring candidates from multiple perspectives and an ensemble step aggregating those scores into a final score.
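The sketch below illustrates, under simplifying assumptions, how several scores can be combined into one. It uses two hypothetical "perspectives": cosine similarity between text vectors, and the share of required skills a candidate lists. It then merges them with a weighted average, which is one simple form of ensemble aggregation. The weights, function names, and example values are illustrative. Real systems may use many more signals, including the wording and tone cues described above, and may learn their weights from past data.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 = same direction, 0.0 = no overlap."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an empty job or profile vector matches nothing
    return dot / (norm_a * norm_b)


def skill_overlap(required: set[str], candidate: set[str]) -> float:
    """Share of required skills the candidate lists (0.0 to 1.0)."""
    if not required:
        return 0.0
    return len(required & candidate) / len(required)


def ensemble_score(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted average of per-perspective scores, giving one final score."""
    total_weight = sum(weights.values())
    return sum(scores[name] * w for name, w in weights.items()) / total_weight


# Hypothetical weights and inputs; each vendor chooses (or learns) its own.
WEIGHTS = {"text": 0.6, "skills": 0.4}
job_vector, candidate_vector = [1, 2, 0], [1, 1, 0]

scores = {
    "text": cosine_similarity(job_vector, candidate_vector),
    "skills": skill_overlap({"python", "sql"}, {"python", "excel"}),
}
print(round(ensemble_score(scores, WEIGHTS), 3))  # 0.769
```

The weights matter. Changing them can reorder candidates without any change in their qualifications, which is one reason to ask vendors how their final score is built.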

Ranking: The candidates are then ranked from highest to lowest score, but only the top N candidates are shown to the HR professional as recommendations.
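The ranking step is the simplest to sketch, yet it strongly shapes what HR actually sees. In this hypothetical example, a cut-off of N = 2 hides a candidate whose score is only 0.001 below the last visible one. The names, scores, and value of N are illustrative assumptions.

```python
def top_n(scores: dict[str, float], n: int) -> list[tuple[str, float]]:
    """Sort candidates by score (highest first) and keep only the first n.

    Everyone below position n is never shown to the recruiter, however
    close their score is to the cut-off.
    """
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    return ranked[:n]


# Hypothetical scores from the matching step.
scores = {"Ana": 0.81, "Ben": 0.77, "Chloe": 0.769, "Dev": 0.52}

shortlist = top_n(scores, n=2)
visible = {name for name, _ in shortlist}
hidden = [name for name in scores if name not in visible]

print(shortlist)  # [('Ana', 0.81), ('Ben', 0.77)]
print(hidden)     # ['Chloe', 'Dev']
```

It can be worth asking the vendor how N is set and whether recruiters can look further down the list.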

Common Risks and Breakpoints

While AI boosts efficiency, several risks may impact fairness and effectiveness:

Data Quality Issues: Incomplete or inconsistent data can cause the system to miss relevant candidates, resulting in unfair treatment. Here are some real-life situations my clients have encountered:

If candidate profiles are not up to date, those candidates will not be shortlisted.

If a candidate submits a CV without also filling in the application form, but the AI is set to treat the application form as the primary source of candidate data.

Along the same lines, if the application form and the CV contain conflicting information.

If the CV is highly stylized and the AI parsing the document can’t handle its font or layout.

If the job description is too generic, identifying relevant candidates becomes a “needle in a haystack” challenge.
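Several of the candidate-side situations above can be caught before scoring with simple checks. The following sketch assumes a hypothetical candidate record with a last-updated date, the text extracted from the CV, and fields from both the application form and the CV. The 365-day staleness threshold is an arbitrary assumption. The checks only flag issues for a person to review and do not fix the data.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class CandidateRecord:
    """Hypothetical candidate record; real platforms use their own schemas."""
    name: str
    last_updated: date
    cv_text: str = ""  # text the CV parser managed to extract
    form: dict[str, str] = field(default_factory=dict)       # application form answers
    cv_fields: dict[str, str] = field(default_factory=dict)  # fields parsed from the CV


def data_quality_flags(rec: CandidateRecord, today: date,
                       max_age_days: int = 365) -> list[str]:
    """List issues that could cause a relevant candidate to be missed.

    max_age_days is an arbitrary, illustrative threshold.
    """
    flags = []
    if (today - rec.last_updated).days > max_age_days:
        flags.append("profile may be out of date")
    if rec.cv_text.strip() and not rec.form:
        flags.append("CV submitted but application form empty")
    if not rec.cv_text.strip():
        flags.append("no usable CV text: missing, or parser failed on the format")
    # Compare fields present in both sources, ignoring case and surrounding spaces.
    for key in sorted(rec.form.keys() & rec.cv_fields.keys()):
        if rec.form[key].strip().lower() != rec.cv_fields[key].strip().lower():
            flags.append(f"CV and form disagree on '{key}'")
    return flags


rec = CandidateRecord(
    name="Ana",
    last_updated=date(2023, 1, 10),
    cv_text="Senior data analyst, 6 years of Python and SQL",
    cv_fields={"years_experience": "6"},
)
print(data_quality_flags(rec, today=date(2025, 12, 16)))
# ['profile may be out of date', 'CV submitted but application form empty']
```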

Overreliance and Blind Trust: Uncritical trust in AI outputs, also known as automation bias, can lead to disqualifying “unicorn” candidates who don’t fit past patterns.

For example, a highly qualified professional with a unique combination of abilities and experience may be treated as an outlier and dismissed.
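A toy example shows how this can happen when a system rewards resemblance to past hires. The sketch below uses hypothetical skill sets and an arbitrary 0.5 threshold. It scores candidates by their average Jaccard similarity to previous hires, where Jaccard similarity is the number of shared skills divided by the number of distinct skills in either set. Instead of rejecting low scorers outright, it routes qualified but unusual candidates to human review. This is one way to build a human-in-the-loop step into the workflow, not a description of any specific vendor's logic.

```python
def jaccard(a: set[str], b: set[str]) -> float:
    """Shared items divided by all distinct items (0.0 to 1.0)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def similarity_to_past_hires(skills: set[str], past_hires: list[set[str]]) -> float:
    """Average Jaccard similarity between a candidate and each past hire."""
    if not past_hires:
        return 0.0
    return sum(jaccard(skills, hire) for hire in past_hires) / len(past_hires)


def route(skills: set[str], required: set[str], past_hires: list[set[str]],
          threshold: float = 0.5) -> str:
    """Suggest a next step; qualified candidates are never rejected automatically.

    threshold is an arbitrary, illustrative value.
    """
    if not required <= skills:
        return "missing required skills"
    if similarity_to_past_hires(skills, past_hires) >= threshold:
        return "shortlist"
    return "human review: qualified but unlike past hires"


# Hypothetical data for illustration.
past_hires = [{"python", "sql", "excel"}, {"python", "sql", "tableau"}]
required = {"python", "sql"}
typical = {"python", "sql", "excel"}
unicorn = {"python", "sql", "rust", "ml_research", "patents"}

print(route(typical, required, past_hires))  # shortlist
print(route(unicorn, required, past_hires))  # human review: qualified but unlike past hires
```

The "unicorn" meets every requirement but scores about 0.33, because most of their skills never appeared among past hires. A system that simply cut at the threshold would drop them.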

Bias Amplification: Algorithms learn from past data, which can contain biases (e.g., related to gender or ethnicity). This risks repeating past discrimination and can result in fines if HR professionals aren’t trained to spot these cases.

Regularly check whether candidate pools and hires show unintended demographic skews.

Maintain a human in the loop: always review AI recommendations yourself or with your team before making decisions.
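One simple way to check for demographic skews is to compare selection rates (candidates shortlisted divided by applicants) across groups. The sketch below divides each group's rate by the highest group's rate and flags ratios under 0.8. That threshold comes from the US "four-fifths rule" heuristic and is used here as an illustrative assumption, not as a test prescribed by the EU AI Act. Group labels and counts are hypothetical.

```python
def selection_rates(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Map each group to selected / applicants, skipping groups with no applicants."""
    return {group: selected / applicants
            for group, (selected, applicants) in outcomes.items() if applicants > 0}


def impact_ratios(outcomes: dict[str, tuple[int, int]],
                  threshold: float = 0.8) -> dict[str, tuple[float, bool]]:
    """Compare each group's selection rate to the highest one.

    Returns group -> (ratio rounded to 2 decimals, flagged). The 0.8
    threshold is an illustrative heuristic. A flag is a prompt to
    investigate, not proof of discrimination.
    """
    rates = selection_rates(outcomes)
    best = max(rates.values(), default=0.0)
    if best == 0:
        return {}  # nobody was selected, so there is nothing to compare
    return {group: (round(rate / best, 2), rate / best < threshold)
            for group, rate in rates.items()}


# Hypothetical shortlisting outcomes: group -> (shortlisted, applicants).
outcomes = {"group_a": (30, 100), "group_b": (15, 100)}
print(impact_ratios(outcomes))
# {'group_a': (1.0, False), 'group_b': (0.5, True)}
```

With small groups, ratios can swing widely by chance. A flag is a reason to look more closely at the data and the tool's configuration, not a verdict on its own.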

Lack of Transparency: Complex AI models often don’t explain why candidates receive the scores they do, which makes decisions hard to justify. How can you change that?

Demand transparency from your vendor. Insist on tools that offer explainability: simple reasons why the AI prefers candidate A over candidate B.

Understand that AI outputs are recommendations, not final judgments. Ask vendors for details on how their algorithms work.

Boost AI literacy across HR so everyone understands AI’s strengths and limits.
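What could a "simple reason why the AI prefers candidate A over B" look like? For a linear scoring model, each feature's contribution is just its weight times its value, so the difference between two candidates can be broken down feature by feature. The sketch below assumes a hypothetical linear model with made-up weights and features. Complex models need attribution methods such as SHAP to approximate this kind of breakdown, so it is worth asking vendors what form their explanations take.

```python
# Hypothetical weights of a simple linear scoring model (illustration only).
WEIGHTS = {"years_experience": 0.05, "skill_match": 0.6, "certifications": 0.1}


def contributions(features: dict[str, float]) -> dict[str, float]:
    """Each feature's contribution to the score: weight * value."""
    return {name: weight * features.get(name, 0.0) for name, weight in WEIGHTS.items()}


def explain(a: dict[str, float], b: dict[str, float]) -> list[tuple[str, float]]:
    """Features ordered by how much they separate candidate A's score from B's.

    Positive values favour A, negative values favour B.
    """
    ca, cb = contributions(a), contributions(b)
    diffs = {name: ca[name] - cb[name] for name in WEIGHTS}
    return sorted(diffs.items(), key=lambda item: abs(item[1]), reverse=True)


# Hypothetical candidates.
candidate_a = {"years_experience": 4, "skill_match": 0.9, "certifications": 1}
candidate_b = {"years_experience": 8, "skill_match": 0.5, "certifications": 0}

for feature, diff in explain(candidate_a, candidate_b):
    print(f"{feature}: {diff:+.2f}")
# skill_match: +0.24
# years_experience: -0.20
# certifications: +0.10
```

Here A outscores B (0.84 vs 0.70) mainly through skill match, despite B's longer experience. This is the kind of statement an HR professional can check against the actual CVs.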

EU AI Act: Why Your Role Is Legally Important

The EU’s AI Act classifies hiring algorithms as high-risk AI systems because they impact fundamental rights such as equal opportunity to work and non-discrimination. Under this law, the companies using such tools must:

Ensure that the AI systems their HR department uses meet strict requirements for data quality, transparency, risk management, and human oversight.

Ensure that the HR professionals handling these tools have a sufficient level of AI literacy to use them responsibly and in accordance with the law.

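Failure to comply can lead to substantial fines. Under the final text of the Act, breaching the obligations for high-risk systems such as hiring tools can cost up to €15 million or 3% of worldwide annual turnover, whichever is higher. The most serious violations (prohibited AI practices) can cost up to €35 million or 7%.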

How your AI Literacy can safeguard your organization

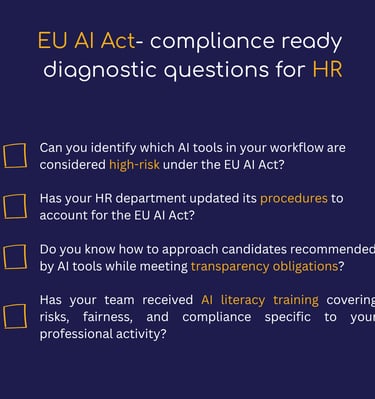

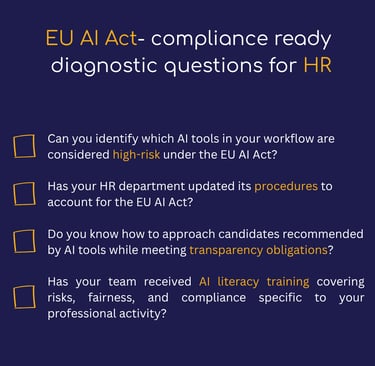

By asking the following questions, any HR professional can lay the first brick toward compliance and protect their organization from serious harm.

If the answer to any of them is NO, your organization is at risk. Addressing these gaps early helps avoid fines and reputational harm while fostering ethical, transparent AI use within your HR department.

Accountability And Enforcement In The EU

Who is accountable for non-compliance with the EU AI Act?

The organization using the AI hiring system (the "deployer" in the Act's terms) is responsible for using it in compliance with the EU AI Act, while the vendor that provides the system carries its own separate obligations. If employees misuse the tool or use it in a non-compliant way, the organization is liable. This is why, as an HR professional, you must feel equipped with the necessary knowledge and critical thinking when using AI tools in your daily work.

Who enforces the EU AI Act?

Enforcement falls to designated national authorities in EU member states, such as data protection agencies and dedicated AI regulators. Spain, for example, has created the Spanish Agency for the Supervision of Artificial Intelligence (AESIA).

Who can complain or trigger investigations under the AI Act?

Candidates who feel unfairly treated or discriminated against can file complaints with these authorities. Complaints can lead to investigations and, if non-compliance is proven, to penalties.

Final Thoughts: Your Essential Responsibility

AI is a powerful tool, but responsibility starts with you as an HR professional. AI literacy is not optional; it’s essential to protect candidates’ rights and safeguard your organization’s reputation and legal standing.

By staying informed, insisting on transparency, maintaining human oversight, and collaborating closely with data scientists and legal teams, you can transform AI hiring from a risk-laden black box into a trusted partner.

If your HR team needs hands-on AI literacy training to comply with the EU AI Act and use AI responsibly, explore our AI Literacy for Professionals Course. Learn what AI is all about, how to critically evaluate AI outputs, detect bias, and maintain compliance confidently.


Sponsored by giraffa-analytics.com

© 2025. All rights reserved.